The cumulants $\kappa_n$ of a random variable $X$ are defined as the coefficients in the expansion of the cumulant generating function $$ g(t)\stackrel{\tiny def}{=} \log E(e^{tX}) = \sum_{n=1}^\infty \kappa_n \frac{t^n}{n!}. $$ Cumulants have some nice properties, including additivity: for statistically independent random variables $X$ and $Y$ we have $$ g_{X+Y}(t)=g_X(t)+g_Y(t), $$ so that $\kappa_n(X+Y)=\kappa_n(X)+\kappa_n(Y)$ for every $n$. Additionally, in a multivariate setting, joint cumulants vanish when the variables are statistically independent, so they seem to generalize correlation in some sense. They are related to moments by Moebius inversion. They are a standard feature in undergraduate probability courses because they appear in a simple proof of the Central Limit Theorem (see for example here).
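To make the moment/cumulant relationship and the additivity property concrete, here is a minimal Python sketch. It assumes finite discrete distributions written as `{value: probability}` dicts, and the helper names (`raw_moments`, `independent_sum`, `cumulants_from_moments`) are hypothetical. Rather than the Moebius inversion over set partitions, it uses the recursion $\kappa_n = m_n - \sum_{j=1}^{n-1}\binom{n-1}{j-1}\kappa_j m_{n-j}$ with $m_k = E(X^k)$, obtained by differentiating $E(e^{tX}) = e^{g(t)}$; it yields the same cumulants.

```python
from fractions import Fraction
from math import comb


def raw_moments(dist, n_max):
    """Raw moments E[X^k] for k = 1..n_max of a finite distribution {value: probability}."""
    return [sum(p * Fraction(x) ** k for x, p in dist.items()) for k in range(1, n_max + 1)]


def independent_sum(dist_x, dist_y):
    """Distribution of X + Y for independent X and Y (discrete convolution)."""
    out = {}
    for x, px in dist_x.items():
        for y, py in dist_y.items():
            out[x + y] = out.get(x + y, 0) + px * py
    return out


def cumulants_from_moments(moments):
    """Convert raw moments [m_1, ..., m_N] into cumulants [kappa_1, ..., kappa_N].

    Uses kappa_n = m_n - sum_{j=1}^{n-1} C(n-1, j-1) * kappa_j * m_{n-j},
    which comes from differentiating E(e^{tX}) = exp(g(t)).
    """
    m = [Fraction(1)] + [Fraction(v) for v in moments]  # m[0] = E[X^0] = 1
    kappa = [Fraction(0)]  # placeholder so that kappa[j] is the j-th cumulant
    for n in range(1, len(m)):
        lower = sum(comb(n - 1, j - 1) * kappa[j] * m[n - j] for j in range(1, n))
        kappa.append(m[n] - lower)
    return kappa[1:]


# Usage: a fair coin, and the sum of two independent fair coins.
coin = {0: Fraction(1, 2), 1: Fraction(1, 2)}
two_coins = independent_sum(coin, coin)

k_coin = cumulants_from_moments(raw_moments(coin, 4))
k_sum = cumulants_from_moments(raw_moments(two_coins, 4))

print([str(k) for k in k_coin])  # ['1/2', '1/4', '0', '-1/8']
print([str(k) for k in k_sum])   # ['1', '1/2', '0', '-1/4']
assert k_sum == [2 * k for k in k_coin]  # cumulants add under independence
```

Here $\kappa_1$ is the mean and $\kappa_2$ the variance. The cumulants of the two-coin sum are exactly twice those of one coin, whereas the raw second moment is not additive: it is $3/2$ for the sum versus $2 \cdot 1/2 = 1$.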

So the cumulants are given by a formula and have a list of good properties. A cumulant is clearly a fundamental concept, but I'm having difficulty figuring out what it is actually measuring, and how it is more than just a computational convenience.

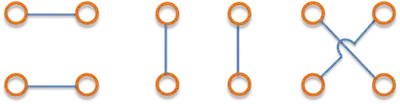

Question: What are cumulants actually measuring? What is their conceptual meaning? Are they measuring the connectivity or cohesion of something?

I apologize if this question is completely elementary. I work in low-dimensional topology, and I'm having difficulty wrapping my head around this basic concept in probability; Google did not help much. I'm vaguely imagining that perhaps cumulants are some kind of measure of the "cohesion" of the probability distribution in some sense, but I have no idea how.