I would like to preface this long answer with a few philosophical remarks. As noted in the original posting, proofs play multiple roles in mathematics: for example, they ensure that certain results are correct and give insight into the problem.

A related aspect is that in the course of proving an intuitively obvious statement, it is often necessary to create a theoretical framework, i.e. definitions that formalize the situation and new tools that address the question. This framework may lead to vast generalizations, either in the course of the proof itself or in the subsequent development of the subject. Often it is the proof, not the statement itself, that generalizes, hence it becomes valuable to know multiple proofs of the same theorem that are based on different ideas. The greatest insight is gained from proofs that subtly modify an original statement that turned out to be wrong or incomplete. Sometimes a whole subject may spring forth from a proof of a key result, which is especially true for proofs of impossibility statements.

Most examples below, chosen among different fields and featuring general interest results, illustrate this thesis.

- Differential geometry

a. It had been known since ancient times that it was impossible to create a perfect (i.e. undistorted) map of the Earth. The first proof was given by Gauss and relies on the notion of intrinsic curvature, which he introduced especially for this purpose. Although Gauss's proof of the Theorema Egregium was complicated, the tools he used became standard in the differential geometry of surfaces.

b. The isoperimetric property of the circle has been known in some form for over two millennia. Part of the motivation for Euler's and Lagrange's work on variational calculus came from the isoperimetric problem. Jakob Steiner devised several different synthetic proofs that contributed technical tools (Steiner symmetrization, the role of convexity), even though they didn't settle the question because they relied on the existence of the absolutely minimizing shape. Steiner's assumption led Weierstrass to consider the general question of existence of solutions to variational problems (later taken up by Hilbert, as mentioned below) and to give the first rigorous proof. Further proofs gave new insight into the isoperimetric problem and its generalizations: for example, Hurwitz's two proofs using Fourier series exploited abelian symmetries of closed curves; the proof by Santaló using integral geometry established the more general Bonnesen inequality; E. Schmidt's 1939 proof works in $n$ dimensions. The full solution of related lattice packing problems led to such important techniques as Dirichlet domains and Voronoi cells and the geometry of numbers.

- Algebra

a. For more than two and a half centuries after Cardano's Ars Magna, no one was able to devise a formula expressing the roots of a general quintic equation in radicals. The Abel–Ruffini theorem and Galois theory not only proved the impossibility of such a formula and provided an explanation for the success and failure of earlier methods (cf. Lagrange resolvents and casus irreducibilis), but, more significantly, put the notion of a group on the mathematical map.

b. Systems of linear equations were already considered by Leibniz. Cramer's rule gave the formula for a solution in the $n\times n$ case, and Gauss developed a method for obtaining the solutions, which yields the least-squares solution in the overdetermined case. But none of this work yielded a criterion for the existence of a solution. Euler, Laplace, Cauchy, and Jacobi all considered the problem of diagonalization of quadratic forms (the principal axis theorem). However, the work prior to 1850 was incomplete because it required genericity assumptions (in particular, the arguments of Jacobi et al. didn't handle singular matrices or forms). Proofs that encompass all linear systems, matrices, and bilinear/quadratic forms were devised by Sylvester, Kronecker, Frobenius, Weierstrass, Jordan, and Capelli as part of the program of classifying matrices and bilinear forms up to equivalence. Thus we got the notions of the rank of a matrix, the minimal polynomial, the Jordan normal form, and the theory of elementary divisors, all of which became cornerstones of linear algebra.

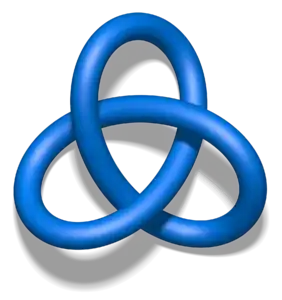

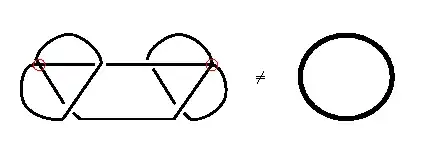

- Topology

a. Attempts to rigorously prove the Euler formula $V-E+F=2$ led to the discovery of non-orientable surfaces by Möbius and Listing.

b. Brouwer's proof of the Jordan curve theorem and of its generalization to higher dimensions was a major development in algebraic topology. Although the theorem is intuitively obvious, it is also very delicate, because various plausible-sounding related statements are actually wrong, as demonstrated by the Lakes of Wada and the Alexander horned sphere.

- Analysis

The work on existence, uniqueness, and stability of solutions of ordinary differential equations and well-posedness of initial and boundary value problems for partial differential equations gave rise to tremendous insights into theoretical, numerical, and applied aspects. Instead of imagining a single transition from 99% ("obvious") to 100% ("rigorous") confidence level, it would be more helpful to think of a series of progressive sharpenings of statements that become natural or plausible after the last round of work.

a. Picard's proof of the existence and uniqueness theorem for a first-order ODE with Lipschitz right-hand side, Peano's proof of existence for continuous right-hand side (uniqueness may fail), and Lyapunov's proof of stability introduced key methods and technical assumptions (the contraction mapping principle, compactness in function spaces, the Lipschitz condition, Lyapunov functions and characteristic exponents).

b. Hilbert's proof of the Dirichlet principle for elliptic boundary value problems and his work on eigenvalue problems and integral equations form the foundation of linear functional analysis.

c. The Cauchy problem for hyperbolic linear partial differential equations was investigated by a whole constellation of mathematicians, including Cauchy, Kowalevski, Hadamard, Petrovsky, L. Schwartz, Leray, Malgrange, Sobolev, and Hörmander. The "easy" case of analytic coefficients is addressed by the Cauchy–Kowalevski theorem. The concepts and methods developed in the course of the proofs in more general cases, such as the characteristic variety, well-posed problem, weak solution, Petrovsky lacuna, Sobolev space, hypoelliptic operator, and pseudodifferential operator, span a large part of the theory of partial differential equations.

- Dynamical systems

Universality for one-parameter families of unimodal continuous self-maps of an interval was experimentally discovered by Feigenbaum and, independently, by Coullet and Tresser in the late 1970s. It states that the ratio between the lengths of intervals in the parameter space between successive period-doubling bifurcations tends to a limiting value $\delta\approx 4.669201$ that is independent of the family. This could be explained by the existence of a nonlinear renormalization operator $\mathcal{R}$ on the space of all maps, which has a unique fixed point $g$ and the property that all but one of the eigenvalues of its linearization at $g$ belong to the open unit disk, while the exceptional eigenvalue is $\delta$ and corresponds to the period-doubling transformation. Later, computer-assisted proofs of this assertion were given, so while Feigenbaum universality had initially appeared mysterious, by the late 1980s it had moved into the "99% true" category.

The full proof of universality for quadratic-like maps by Lyubich (MR) followed this strategy, but it also required very elaborate ideas and techniques from complex dynamics due to a number of people (Douady–Hubbard, Sullivan, McMullen) and yielded hitherto unknown information about the combinatorics of non-chaotic quadratic maps of the interval and the local structure of the Mandelbrot set.
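To make the limiting ratio $\delta$ concrete, here is a minimal numerical sketch. It rests on several assumptions that are mine rather than part of the discussion above: the family is taken to be $f_a(x) = a - x^2$, the bifurcation points are replaced by the superstable parameters (where the critical point $0$ has period $2^k$), whose spacing ratios are known to tend to the same limit, and those parameters are located by Newton's method. The function name `feigenbaum_estimates` is hypothetical.

```python
def feigenbaum_estimates(max_level=10, newton_steps=10):
    """Estimate delta from superstable parameters of f_a(x) = a - x^2.

    Assumption: spacing ratios of superstable parameters are used in
    place of spacing ratios of the bifurcation points themselves.
    """
    # Superstable parameters for period 1 (a = 0) and period 2 (a = 1).
    a_prev, a_curr = 0.0, 1.0
    delta = 3.2  # rough seed, used only to predict the next parameter
    estimates = []
    for level in range(2, max_level + 1):
        # Predict the next superstable parameter from the previous gap.
        a = a_curr + (a_curr - a_prev) / delta
        for _step in range(newton_steps):
            # x is the 2^level-th iterate of the critical point 0;
            # dx is its derivative with respect to the parameter a.
            x, dx = 0.0, 0.0
            for _iterate in range(1 << level):
                dx = 1.0 - 2.0 * x * dx
                x = a - x * x
            a -= x / dx  # Newton step towards f_a^(2^level)(0) = 0
        delta = (a_curr - a_prev) / (a - a_curr)
        estimates.append(delta)
        a_prev, a_curr = a_curr, a
    return estimates


if __name__ == "__main__":
    for level, estimate in enumerate(feigenbaum_estimates(), start=2):
        print(level, round(estimate, 6))
```

The successive estimates should settle near $4.669$. Swapping in another unimodal family (with its own critical point and parameter derivative) is the natural experiment for seeing the universality claim at work, though of course this is numerical evidence, not a proof.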

- Number theory

Agrawal, Kayal, and Saxena proved that PRIMES is in P, i.e. primality testing can be done deterministically in polynomial time. While the result had been widely expected, their work was striking in at least two respects: it used very elementary tools, such as variations of Fermat's little theorem, and it was carried out by a computer science professor and two undergraduate students. The sociological effect of the proof may have been even greater than its numerous consequences for computational number theory.
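The variation of Fermat's little theorem underlying the AKS test is the polynomial congruence $(X+a)^n \equiv X^n + a \pmod n$, which for $\gcd(a,n)=1$ holds if and only if $n$ is prime. The sketch below checks it for $a=1$ by testing that every middle binomial coefficient is divisible by $n$. This is emphatically not the AKS algorithm: it takes time exponential in the number of digits of $n$, whereas the actual proof reduces the congruence modulo a polynomial of small degree to obtain polynomial time. The function name is hypothetical.

```python
from math import comb


def satisfies_polynomial_fermat(n):
    """Check (X + 1)^n == X^n + 1 (mod n) coefficient by coefficient.

    Illustration only: this is the starting identity of AKS, not the
    polynomial-time test itself.
    """
    if n < 2:
        return False
    # The congruence holds iff n divides C(n, k) for every 0 < k < n.
    return all(comb(n, k) % n == 0 for k in range(1, n))


if __name__ == "__main__":
    print([n for n in range(2, 40) if satisfies_polynomial_fermat(n)])
```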

'Why?' is natural and important in its own right. Curiosity appears to be built in to us. 'Why?' drives a significant portion of the sciences and humanities and everyday life. It would be hard to deny that our active desire to satisfy this inbuilt curiosity is at least partially responsible for human advance (whatever that is). For me, trying to find specific examples where having a proof 'saves lives' cheapens the whole process. – Robby McKilliam Sep 04 '10 at 00:26