Teaching group theory this semester, I found myself laboring through a proof that the sign of a permutation is a well-defined homomorphism $\operatorname{sgn} : \Sigma_n \to \Sigma_2$. An insightful student pressed me for a more illuminating proof, and I realized that this is a great question to which I don't know a satisfying answer. There are many ways of phrasing this question:

Question: Is there a conceptually illuminating reason explaining any of the following essentially equivalent statements?

The symmetric group $\Sigma_n$ has a subgroup $A_n$ of index 2.

The symmetric group $\Sigma_n$ is not simple.

There exists a nontrivial group homomorphism $\Sigma_n \to \Sigma_2$.

The identity permutation $(1) \in \Sigma_n$ is not the product of an odd number of transpositions.

The function $\operatorname{sgn} : \Sigma_n \to \Sigma_2$, which counts the number of transpositions "in" a permutation mod 2, is well-defined.

There is a nontrivial "determinant" homomorphism $\det : \operatorname{GL}_n(k) \to \operatorname{GL}_1(k)$.

….

Of course, there are many proofs of these facts available, and the most pedagogically efficient will vary by background. In this question, I'm not primarily interested in the pedagogical merits of different proofs, but rather in finding an argument where the existence of the sign homomorphism looks inevitable, rather than a contingency which boils down to some sort of auxiliary computation.

The closest thing I've found to a survey article on this question is a 1972 note, "An Historical Note on the Parity of Permutations" by T. L. Bartlow, in the American Mathematical Monthly. However, although Bartlow gives references to several different proofs of these facts, he doesn't comprehensively review and compare all the arguments himself.

Here are a few possible avenues:

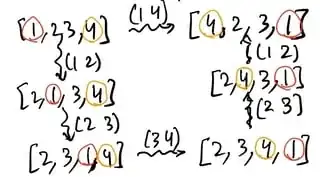

$\Sigma_n$ is a Coxeter group, and as such it has a presentation by generators (the adjacent transpositions) and relations where each relation preserves the length of words mod 2. But just from the definition of $\Sigma_n$ as the group of automorphisms of a finite set, it's not obvious that it should admit such a presentation, so this is not fully satisfying.
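For concreteness, here is the standard Coxeter presentation being referred to, quoted rather than derived, with $s_i$ denoting the adjacent transposition $(i\ \ i{+}1)$ (the notation $s_i$ is introduced here for illustration):

$$
\Sigma_n \;\cong\; \bigl\langle\, s_1, \dots, s_{n-1} \;\bigm|\; s_i^2 = 1,\quad (s_i s_{i+1})^3 = 1,\quad (s_i s_j)^2 = 1 \ \text{ for } |i-j| \ge 2 \,\bigr\rangle
$$

The relators have lengths 2, 6, and 4, all even, so the assignment $s_i \mapsto -1$ respects every relation and extends to a homomorphism $\Sigma_n \to \{\pm 1\}$. The nonobvious part remains that $\Sigma_n$ admits this presentation at all.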

Using a decomposition into disjoint cycles, one can simply compute what happens when multiplying by a transposition. This is not bad, but here the sign still feels like a deus ex machina sort of formula.
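The computation in question can be checked by brute force for small $n$. The following is a minimal sketch, assuming permutations are stored as tuples `p` with `p[i]` the image of `i`; the helper names `cycle_count`, `times_transposition`, and `transposition_shifts_cycle_count` are hypothetical. It verifies that multiplying by a transposition changes the number of cycles (fixed points included) by exactly one, which is what makes $(-1)^{n - \#\text{cycles}}$ flip under each transposition.

```python
from itertools import combinations, permutations


def cycle_count(p):
    """Number of cycles of p, counting fixed points as 1-cycles."""
    seen = [False] * len(p)
    count = 0
    for start in range(len(p)):
        if not seen[start]:
            count += 1
            i = start
            while not seen[i]:  # walk the cycle containing `start`
                seen[i] = True
                i = p[i]
    return count


def times_transposition(p, a, b):
    """Return p composed with the transposition (a b), i.e. i -> p[(a b)(i)]."""
    q = list(p)
    q[a], q[b] = q[b], q[a]
    return tuple(q)


def transposition_shifts_cycle_count(n):
    """Check that every transposition changes the cycle count by exactly 1."""
    return all(
        abs(cycle_count(times_transposition(p, a, b)) - cycle_count(p)) == 1
        for p in permutations(range(n))
        for a, b in combinations(range(n), 2)
    )
```

A check like this confirms the formula without explaining it: the cycle either splits in two or merges with another, depending on whether `a` and `b` lie in the same cycle.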

Defining the sign homomorphism in terms of the number of pairs whose order is swapped likewise boils down to a not-terrible computation to see that the sign function is a homomorphism. But it still feels like magic.
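As a sanity check on this definition, here is a minimal sketch that computes the sign from the parity of the number of inverted pairs and verifies multiplicativity exhaustively for small $n$. It assumes permutations are tuples `p` with `p[i]` the image of `i`; the function names are hypothetical.

```python
from itertools import permutations


def sign_by_inversions(p):
    """Sign of p as (-1)^(number of pairs i < j with p[i] > p[j])."""
    n = len(p)
    inversions = sum(
        1 for i in range(n) for j in range(i + 1, n) if p[i] > p[j]
    )
    return -1 if inversions % 2 else 1


def compose(p, q):
    """Return the composite p∘q, i.e. i -> p[q[i]]."""
    return tuple(p[q[i]] for i in range(len(q)))


def is_homomorphism(n):
    """Brute-force check that sign(p∘q) == sign(p) * sign(q) on all of S_n."""
    perms = list(permutations(range(n)))
    return all(
        sign_by_inversions(compose(p, q))
        == sign_by_inversions(p) * sign_by_inversions(q)
        for p in perms
        for q in perms
    )
```

Of course, an exhaustive check for $n \le 4$ or so only confirms the claim in small cases; it is no substitute for the computation, let alone for a conceptual reason.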

Proofs involving polynomials again feel like magic to me.
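For reference, one common version of the polynomial argument (sketched here as an assumption about which proof is meant) lets $\Sigma_n$ act on polynomials by $(\sigma \cdot f)(x_1, \dots, x_n) = f(x_{\sigma(1)}, \dots, x_{\sigma(n)})$ and applies this action to the Vandermonde product:

$$
\Delta = \prod_{1 \le i < j \le n} (x_i - x_j), \qquad \sigma \cdot \Delta = \prod_{1 \le i < j \le n} \bigl(x_{\sigma(i)} - x_{\sigma(j)}\bigr) = \operatorname{sgn}(\sigma)\, \Delta .
$$

Each factor of $\Delta$ reappears in $\sigma \cdot \Delta$ up to sign, so $\sigma \cdot \Delta = \pm\Delta$, and because this is a group action, $\operatorname{sgn}(\sigma\tau)\,\Delta = \sigma \cdot (\tau \cdot \Delta) = \operatorname{sgn}(\sigma)\operatorname{sgn}(\tau)\,\Delta$. The choice of $\Delta$ is the part that feels pulled out of a hat.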

Some sort of topological proof might be illuminating to me.

Another counterargument: A left adjoint would have to preserve initial objects, but the category of finite sets and injections has an initial object (the empty set has a unique injection into every set), while the category of 2-element sets and injections has no initial object (any 2-element set has two different injections to any other 2-element set).

– Sridhar Ramesh Mar 10 '22 at 15:46