Still, it seems worth recording what you know. I think at this point the question should be treated as "can we figure out anything?" – David E Speyer

Well, OK. Trying to figure out anything is exactly what we are now doing with Zachary Chase, and below is a small observation.

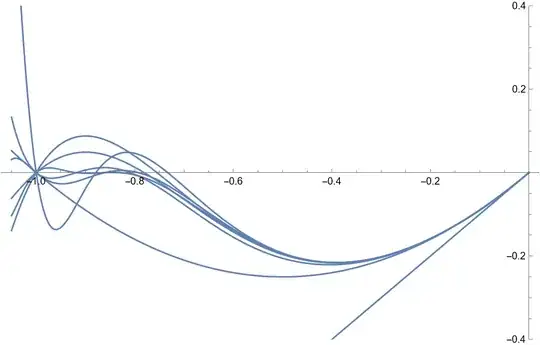

Let $\alpha>0$. Suppose that $n$ is even and we want to construct a polynomial $P_a(x)=\sum_{j=0}^n a_jx^j$ with $a_0=a_n=\alpha$, $a_j\in[0,1]$ for $1\le j\le n-1$ having roots $-1,-x,-x^2,\dots,-x^m$ where $x\in(0,1)$ is some number close to $1$.

It is possible if and only if the convex set $$

E=\{(a_0,a_n,P_a(-x^k), 0\le k\le m):\\ a_0,a_n\in \mathbb R, a_j\in[0,1], 1\le j\le n-1\}\subset \mathbb R^{m+3}

$$

contains the point $z=(\alpha,\alpha,0,\dots,0)$. So suppose that it does not. Then there is a non-trivial linear functional $\psi$ on $\mathbb R^{m+3}$ that is non-negative on $E-z$, i.e., we can find some coefficients $u,v,w_0,\dots,w_m$, not all $0$, such that

$$

u(a_0-\alpha)+v(a_n-\alpha)+\sum_{k=0}^m w_kP_a(-x^k)\ge 0

$$

for every admissible choice of the coefficient vector $a$.

Introduce the polynomial $Q(z)=\sum_{k=0}^m w_k z^k$. Then the condition above can be rewritten as

$$

-u\alpha-v\alpha+(u+Q(1))a_0+(v+Q(x^n))a_n+\sum_{j=1}^{n-1}(-1)^jQ(x^j)a_j\ge 0\,.

$$
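As a sanity check on this rewriting, here is a minimal Python sketch that evaluates both forms of the functional on random data. It assumes that the evaluation points are $-x^k$ (which is what the factor $(-1)^j$ reflects) and that $n$ is even; the function names and the sample values are mine and chosen only for illustration.

```python
import random

def poly(coeffs, t):
    """Evaluate sum_j coeffs[j] * t**j."""
    return sum(c * t**j for j, c in enumerate(coeffs))

def functional_direct(a, w, u, v, alpha, x):
    """u(a_0 - alpha) + v(a_n - alpha) + sum_k w_k P_a(-x^k)."""
    n = len(a) - 1
    return (u * (a[0] - alpha) + v * (a[n] - alpha)
            + sum(w_k * poly(a, -(x**k)) for k, w_k in enumerate(w)))

def functional_rewritten(a, w, u, v, alpha, x):
    """The same quantity expressed through Q(z) = sum_k w_k z^k (n even)."""
    n = len(a) - 1
    assert n % 2 == 0
    middle = sum((-1)**j * poly(w, x**j) * a[j] for j in range(1, n))
    return (-u * alpha - v * alpha
            + (u + poly(w, 1.0)) * a[0]
            + (v + poly(w, x**n)) * a[n]
            + middle)

# Arbitrary illustrative data: a_0, a_n free, the other a_j in [0, 1].
rng = random.Random(0)
n, m, x, alpha = 10, 4, 0.9, 1.5
a = [rng.uniform(-2, 2)] + [rng.random() for _ in range(n - 1)] + [rng.uniform(-2, 2)]
w = [rng.uniform(-1, 1) for _ in range(m + 1)]
u, v = rng.uniform(-1, 1), rng.uniform(-1, 1)
print(functional_direct(a, w, u, v, alpha, x),
      functional_rewritten(a, w, u, v, alpha, x))
```

The two printed numbers should agree up to rounding.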

Now, since $a_0$ and $a_n$ are free to run over the entire real line, we must have $u+Q(1)=v+Q(x^n)=0$. As to the rest of the expression, its minimum equals

$$

U=\sum_{j=1}^{n-1} \min[(-1)^jQ(x^j),0]\,.

$$
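The formula for $U$ is just the minimum of a linear function over the box $[0,1]^{n-1}$: put $a_j=1$ exactly where the coefficient $(-1)^jQ(x^j)$ is negative and $a_j=0$ elsewhere. A small brute-force check over the vertices of the box, with made-up weights $w_k$ that carry no special meaning:

```python
from itertools import product

def Q(w, z):
    """Q(z) = sum_k w_k z^k."""
    return sum(w_k * z**k for k, w_k in enumerate(w))

def U_formula(w, x, n):
    """Sum of the negative parts of the coefficients (-1)^j Q(x^j), 1 <= j <= n-1."""
    return sum(min((-1)**j * Q(w, x**j), 0.0) for j in range(1, n))

def U_bruteforce(w, x, n):
    """Minimum of sum_j (-1)^j Q(x^j) a_j over all a in {0,1}^(n-1)."""
    coeffs = [(-1)**j * Q(w, x**j) for j in range(1, n)]
    return min(sum(c * a_j for c, a_j in zip(coeffs, vertex))
               for vertex in product((0, 1), repeat=n - 1))

w_example = [0.3, -1.0, 0.5]   # hypothetical weights, for illustration only
print(U_formula(w_example, 0.95, 8), U_bruteforce(w_example, 0.95, 8))
```

Checking the vertices is enough because a linear function on a box attains its minimum at a vertex.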

Thus we must have

$$

\alpha(Q(1)+Q(x^n))+U\ge 0

$$

for some not identically $0$ polynomial $Q$ of degree at most $m$.

Now, when $x$ is really close to $1$, the points $x^j$, $j=1,\dots,n-1$ make an almost equispaced net on the interval $I=[x^n,1]$. Let $\mu=|Q(z)|=\max_I|Q|$. Then, by Markov's inequality, $|Q'|\le\frac 2{|I|}m^2\mu$ on $I$, so there is an interval of length $\frac{|I|}{4m^2}$ containing $z$ on which $Q$ preserves sign and is at least $\mu/2$ in absolute value. Since the powers $x^j$ are separated by about $|I|/n$, this interval contains about $cn/m^2$ powers $x^j$ with odd $j$ and about as many with even $j$. Choosing the parity appropriately, we get $U\le -c\frac{n}{m^2}\mu$.

On the other hand, $Q(1)+Q(x^n)\le 2\mu$. Hence we run into a contradiction when $2\alpha m^2\le cn$.

The conclusion: For every fixed $\alpha\ge 1$ and even $n$, there exists a polynomial $P_a(x)$ with $a_0=a_n=\alpha$, $a_j\in[0,1]$ ($j=1,\dots,n-1$) having $m$ distinct roots on $[-1,0)$, provided that $m^2\le c\alpha^{-1} n$.

It means that the non-negativity of the coefficients doesn't impose any substantial additional restrictions on the number of roots compared to the boundedness alone and the whole issue is the discretization from $[0,1]$ to $\{0,1\}$.

Edit: I'll continue dumping obvious observations here. We'll now construct a polynomial with coefficients $0$ and $1$ having about $\frac{\log^2 n}{\log\log n}$ roots. While by itself this lower bound is rather pathetic, it still means that one should, probably, concentrate for a while on driving the lower bound up rather than the upper bound down.

The key observation is that $\sqrt[3]2<\frac 43$ by Bernoulli, so

$$

-1+\sqrt[3]{\frac 12}+\frac 12-\left(\frac 12\right)^3-\left(\frac 12\right)^9

\\

>-1+\frac 34+\frac 12-\frac 18-\frac 18=0\,.

$$

The immediate conclusion is that if $a=(-1,-1,1,1,-1,-1,1,1,\dots)$ and $p_j$ is an increasing sequence of positive integers such that $p_{j+1}\ge 3p_j$ for all $j$, then the polynomial

$$

1+\sum_{k=1}^u a_kx^{p_k}

$$

has at least about $u/2$ sign changes on $(0,1)$. To turn it into a $0,1$ polynomial with roots on $(-1,0)$, we need also to ensure that the parity of $p_j$ agrees with the sign of $a_j$, of course.
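To see the sign changes concretely, one can evaluate the polynomial at the points where $x^{p_k}=\frac 12$ with even $k$: there the terms with smaller exponents nearly cancel, the tail is tiny, and the sign is that of $a_k$. Below is a minimal Python sketch with the illustrative choice $p_k=3^k$, $u=12$ (my choice; the parity requirement is ignored here).

```python
def coeff(k):
    """a_k for k = 1, 2, 3, ...: the pattern -1, -1, +1, +1, -1, -1, ..."""
    return -1 if ((k - 1) // 2) % 2 == 0 else 1

def value_at_half_point(k, exponents):
    """Value of 1 + sum_j a_j x^{p_j} at the x in (0, 1) with x^{p_k} = 1/2."""
    p_k = exponents[k - 1]
    # x^{p_j} = 2^{-p_j / p_k}; computed directly, without forming x itself.
    return 1.0 + sum(coeff(j) * 2.0 ** (-p_j / p_k)
                     for j, p_j in enumerate(exponents, start=1))

exponents = [3**k for k in range(1, 13)]          # p_{k+1} = 3 p_k, u = 12
values = [value_at_half_point(k, exponents) for k in range(2, 13, 2)]
signs = [1 if val > 0 else -1 for val in values]
sign_changes = sum(s != t for s, t in zip(signs, signs[1:]))

# The margin in the key inequality above.
key_margin = -1 + 0.5 ** (1 / 3) + 0.5 - 0.5**3 - 0.5**9
print(signs, sign_changes, key_margin)
```

The evaluation points increase with $k$, so consecutive values of opposite sign correspond to genuine sign changes on $(0,1)$.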

Now fix the (large) target degree $n$. Let $q,u,v$ be positive integers. Let $I_k=[3\cdot 6^{k-1} q, 6^k q]$ ($k=1,\dots,u$).

We will now choose $uv$ pairwise distinct integers $p_{ij}$ ($i=1,\dots,v, j=1,\dots,u$) so that for fixed $i$ one has $p_{ij}\in I_j$ for all $j$ and $p_{ij}$ has appropriate parity to be used in the above construction. We will also require that every positive integer $p$ can be written as the sum of at most $v$ integers $p_{ij}$ in at most one way. To ensure that such a choice is possible by the mindless "just-take-what-is-still-available" algorithm, it is enough to require that $(uv)^{2v-1}<q$.

Now just put $P_i(x)=1+\sum_{j=1}^u x^{p_{ij}}$ and $P(x)=\prod_{i=1}^v P_i(x)$. Then $P$ is a $0,1$ polynomial of degree $\le 6^u qv$ with about $uv/2$ roots. To keep the degree under $n$ and to satisfy the previous condition, we choose $q\approx\sqrt n$, $u\approx c\log n$, $v\approx c\frac{\log n}{\log\log n}$ with sufficiently small $c>0$.
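For a feeling of the sizes involved, here is a rough Python sketch that picks the parameters for a given $n$ and checks the two requirements $6^uqv\le n$ and $(uv)^{2v-1}<q$. The value $c=0.1$, the use of natural logarithms, and the rounding down are my assumptions; the argument only needs $c$ to be small enough.

```python
import math

def parameters(n, c=0.1):
    """Pick q ~ sqrt(n), u ~ c log n, v ~ c log n / log log n (natural logs)."""
    log_n = math.log(n)
    q = math.isqrt(n)
    u = max(1, int(c * log_n))
    v = max(1, int(c * log_n / math.log(log_n)))
    return q, u, v

def conditions_hold(n, q, u, v):
    """Check the degree bound and the room needed for the greedy choice."""
    degree_ok = 6**u * q * v <= n
    greedy_ok = (u * v) ** (2 * v - 1) < q
    return degree_ok and greedy_ok

n = 10**40
q, u, v = parameters(n)
print(q, u, v, conditions_hold(n, q, u, v))
```

Even for $n=10^{40}$ this yields only about $uv/2=9$ roots, which is a fair picture of how slowly $\frac{\log^2 n}{\log\log n}$ grows.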

To be completely honest, I should also ensure that the roots of different $P_i$ are different too, but with that much freedom in choosing $p_{ij}$ that is rather trivial, so I'll leave it as an exercise to the interested readers.

Edit 2: Some more "Mathematische Banalen". This time we will show the existence of a polynomial with coefficients $0,\pm 1$ having $c\frac{\sqrt n}{\log n}$ zeroes on $[0,1]$.

This still falls a bit short of $\sqrt n$, but is way better than $n^{1/4}$ David found in the literature for this case.

We start with a few observations about such polynomials. First, if $P$ is such a polynomial of degree $n$, then

$$

\int_0^1 |P(x)|\,dx\ge n^{-C\sqrt n}

$$

for large $n$ unless $P$ is identically $0$.

Indeed, if we use the same polynomial $Q$ and the differential operators $D_\lambda$, we will see that applying $D_{\lambda_s}$ with various $\lambda_s\in[0,n]$ to $P$ at most $C\sqrt n$ times, we'll get a polynomial $\widetilde P$ in which the first non-zero coefficient is $\pm 1$ and the sum of the absolute values of all other coefficients is below $1/2$. Then $\int_0^1|\widetilde P(x)|\,dx\ge\frac 12\int_0^1 x^n\,dx=\frac 1{2(n+1)}$.

However, due to Markov's inequality $\|P'\|_\infty\le 2n^2\|P\|_\infty$, we have that the $L^1$ and the $L^\infty$ norms of $P$ are equivalent up to a factor $Cn^2$. Moreover, $|P|\ge \frac 12\|P\|_\infty$ on an interval of length $cn^{-2}$ around the point of the maximum of $|P|$. Thus, we have $\|P'\|_1\le Cn^4\|P\|_1$ and $\|P\|_\infty\le Cn^2 \int_{[0,1]\setminus E}|P|$ for every set $E$ of measure $|E|\le cn^{-2}$.

The first conclusion shows that every application of $D_\lambda$ increases the $L^1$ norm of a polynomial of degree $n$ at most $Cn^4$ times, which immediately implies our first observation.
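Markov's inequality on $[0,1]$ is sharp: for the shifted Chebyshev polynomial $T_n(2x-1)$ the sup norm is $1$ and the derivative reaches $2n^2$ at the endpoints. A short numerical illustration in Python (the grid size and the choice $n=6$ are arbitrary):

```python
def shifted_chebyshev(n, x):
    """Return (T_n(2x - 1), d/dx T_n(2x - 1)) via the three-term recurrence."""
    if n == 0:
        return 1.0, 0.0
    y = 2.0 * x - 1.0
    t_prev, t_cur = 1.0, y          # T_0(y), T_1(y)
    d_prev, d_cur = 0.0, 1.0        # T_0'(y), T_1'(y)
    for _ in range(n - 1):
        t_next = 2.0 * y * t_cur - t_prev
        d_next = 2.0 * t_cur + 2.0 * y * d_cur - d_prev
        t_prev, t_cur = t_cur, t_next
        d_prev, d_cur = d_cur, d_next
    return t_cur, 2.0 * d_cur       # chain rule: dy/dx = 2

def sup_norms(n, grid=2000):
    """Maxima of |P| and |P'| over a uniform grid on [0, 1], P(x) = T_n(2x - 1)."""
    samples = [shifted_chebyshev(n, i / grid) for i in range(grid + 1)]
    return max(abs(t) for t, _ in samples), max(abs(d) for _, d in samples)

max_value, max_derivative = sup_norms(6)
print(max_value, max_derivative, 2 * 6**2)
```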

Now consider all polynomials $R$ of degree $n$ with coefficients $0,1$ and for each of them compute their first $m+1$ moments $\int_0^1 R(x)x^k\,dx$ ($k=0,\dots,m$). Those are numbers in $[-n-1,n+1]$. Using the pigeonhole principle, as usual, and shamelessly exploiting the fact that the difference set is exactly what we need, we find a polynomial $P$ with coefficients $0,\pm 1$ that is not identically $0$ but satisfies

$$

\left|\int_0^1 P(x)x^k\,dx\right|\le 2(n+1)2^{-\frac nm}

$$

for all $k=0,\dots,m$.

Now assume that $P$ has only $u<m$ distinct roots $r_j$ on $[0,1]$ at which a crossing occurs. Consider $q(x)=\prod_j(x-r_j)$. That is a polynomial of degree $u<m$ with the sum of absolute values of its coefficients at most $2^m$. Thus

$$

\left|\int_0^1 P(x)q(x)\,dx\right|\le 2(n+1)2^{-\frac nm}2^m\,.

$$

On the other hand, $Pq$ preserves sign and $|q|\ge (c'n^{-2})^m$ outside a set $E\subset \mathbb R$ of measure $|E|<cn^{-2}$ (Cartan's lemma). Hence the LHS equals

$$

\int_0^1|P||q|\ge\int_{[0,1]\setminus E}|P||q|\ge (c'n^{-2})^m cn^{-2}\|P\|_\infty\ge (c'n^{-2})^m cn^{-2} n^{-C\sqrt n}\,,

$$

so if

$$

(c'n^{-2})^m cn^{-2} n^{-C\sqrt n}>2(n+1)2^{-\frac nm}2^m\,,

$$

which happens for $m=c\frac{\sqrt n}{\log n}$, we get a contradiction.
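To see where the $\log n$ is lost, it helps to compare the logarithms of the two sides. The sketch below does this in Python with hypothetical values $C=5$, $c=c'=0.1$ (the true constants are not specified above, so these numbers are assumptions for illustration only) and $m=c_0\frac{\sqrt n}{\log n}$.

```python
import math

def logs_of_both_sides(n, m, C, c, c_prime):
    """Natural logs of the left and right sides of the displayed inequality."""
    log_n = math.log(n)
    left = (m * (math.log(c_prime) - 2 * log_n)   # (c' n^{-2})^m
            + math.log(c) - 2 * log_n             # c n^{-2}
            - C * math.sqrt(n) * log_n)           # n^{-C sqrt(n)}
    right = math.log(2 * (n + 1)) + (m - n / m) * math.log(2)
    return left, right

def contradiction(n, c0, C=5.0, c=0.1, c_prime=0.1):
    """True if the inequality holds for m = c0 * sqrt(n) / log(n)."""
    m = c0 * math.sqrt(n) / math.log(n)
    left, right = logs_of_both_sides(n, m, C, c, c_prime)
    return left > right

print(contradiction(10**6, 0.1), contradiction(10**6, 1.0))
```

The dominant terms are $-C\sqrt n\log n$ on the left and $-\frac nm\log 2$ on the right, so the inequality holds once $\frac nm$ exceeds a large multiple of $\sqrt n\log n$, which is where the factor $\log n$ in $m$ comes from.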

Next Edit:

Let's now construct (or, rather, prove the existence of) a non-zero polynomial $P$ with coefficients $0,\pm 1$ of degree at most $n$ that has at least $c\sqrt n$ roots on $(0,1)$. This construction will, finally, justify my casual remark in the beginning of this long discussion (which I still hope to continue) and show that my memory, albeit failing, can still be occasionally trusted somewhat. If you ask me when, where, and by whom this argument was first invented, I have no idea.

We'll proceed as before but do everything way more carefully. First, we shall show that if $a>0$ is a small number, then every polynomial $P(z)=\sum_{k=0}^n a_kz^k$ with coefficients $a_k\in [-1,1]$ and $a_0=\pm 1$ satisfies $\|P\|_{L^0(I)}\ge \exp(-C/a)$ where $I=[1-2a,1-a]$ and for a non-negative function $f$ on an interval $J$, $\|f\|_{L^0(J)}=\exp\left[\frac{1}{|J|}\int_J\log| f|\right]$ is the geometric mean of $f$ on $J$.

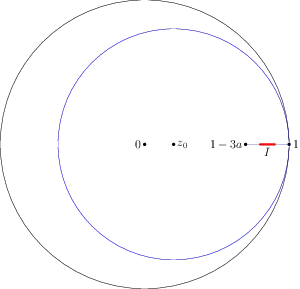

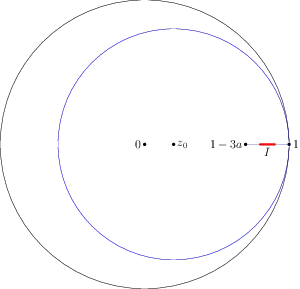

This is achieved by considering the domain $\Omega$ that is a disk centered at some point $z_0\in(0,\frac 13)$ (say, $z_0=\frac 16$) of radius $1-|z_0|$ with a slit $[1-3a,1]$ (the blue circle on the figure below).

Applying Jensen's inequality, we see that

$$

0 =\log|P(0)|\le\int_{\partial\Omega}\log|P|d\omega

$$

where $\omega$ is the harmonic measure on $\partial\Omega$ with respect to $0$. Now we first estimate the integral of $\log_+|P|$ using the trivial bound $|P(z)|\le\frac 1{1-|z|}$. We split $\partial\Omega$ into the slit part $S$ and the circle part $C$. Note that the conformal mapping of $\Omega$ to the unit disk is given by an explicit formula, so the density of $\omega$ can be found exactly, but I still prefer a back of the envelope computation that isn't algebraically heavy even if it is a bit lengthier.

First, we consider the harmonic function $u(z)=\log\frac 1{|1-z|}$ in $\Omega$. We have

$$

0=u(0)=\int_S\log\frac 1{|1-z|}\,d\omega(z)+\int_C\log\frac 1{|1-z|}\,d\omega(z)\,.

$$

Since $\log\frac 1{|1-z|}\ge -\log 2$ on $C$, we conclude that

$$

\int_S\log_+|P|\,d\omega\le \int_S\log\frac 1{1-|z|}\,d\omega(z)

\\

=\int_S\log\frac 1{|1-z|}\,d\omega(z)\le\log 2\,.

$$

Now note that on $C$, the harmonic measure $\omega$ is dominated by the harmonic measure for the disk without a slit, which has bounded density with respect to the Lebesgue measure on the circumference, so the integral of $\log\frac{1}{1-|z|}$ with respect to $\omega$ is uniformly bounded by some constant depending on (fixed) $z_0$ but not on the size of the slit. Thus, $\int_{\partial\Omega}\log_+|P|\,d\omega\le C$ independently of $a$ and, therefore, $\int_I \log_-|P|\,d\omega\le \int_{\partial\Omega}\log_-|P|\,d\omega\le C$ as well.

Now we need a clearer idea of what $\omega$ is on $I$. First, map the disk conformally to the upper half-plane so that $0$ is mapped to $i$ and $1$ to $0$, say. Then the slit $S$ will be mapped to $[0,hi]$ for $h$ comparable to $3a$ and the mapping will be bi-Lipschitz on the slit and $\omega$ will be mapped to the harmonic measure $\omega'$ in the half-plane with the new slit.

Now, we use the usual $w\mapsto\sqrt{w^2+h^2}$. Then we'll get $d\omega'(w)\approx \frac{|w|}{\sqrt{|w^2+h^2|}}|dw|$. When $z$ is in $I$, the corresponding point $w$ is in the "middle part" of $[0,hi]$, so the factor in front of $|dw|$ is comparable to $1$. Thus, on $I$, we have $d\omega(z)\approx |dz|$ and we conclude that $\int_{I}\log_-|P(x)|\,dx\le C$, hence $\int_{I}\log|P(x)|\,dx\ge -C$, i.e., $\|P\|_{L^0(I)}\ge \exp[-C/|I|]=\exp[-C/a]$.

If $a_0=0$ and $a_k\in\{0,\pm 1\}$, consider the least $m\in[0,n]$ for which $a_m=\pm 1$. The factor $x^m\ge(1-2a)^n\ge \exp[-3an]$ on $I$, so in this case

$$

\|P\|_{L^0(I)}\ge\exp\left[-\tfrac Ca-3an\right]\,.

$$

We shall now fix $a=\frac 1{\sqrt n}$, so

$$

\|P\|_{L^0(I)}\ge\exp\left[-C\sqrt n\right]\,.

$$

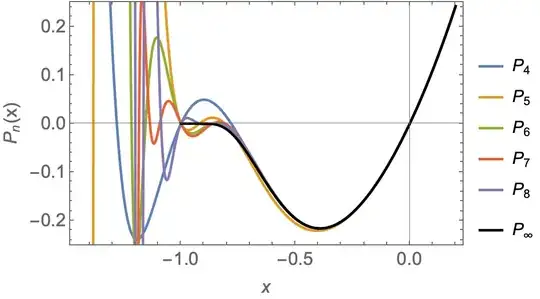

This part was definitely known to Borwein and Erdélyi. Now we play the same game as before but only on the interval $I$. Formally, for a polynomial $P$, we define

$$

\widetilde P(t)=P(1-a-at), \qquad t\in[0,1]\,.

$$

The above result states now that for every non-zero $P$ with coefficients $0,\pm 1$, we have

$$

\|\widetilde P\|_{L^0([0,1])}\ge \exp[-C\sqrt n]\,.

$$

Now, noting that $\|\widetilde p\|_{L^\infty([0,1])}\le n$ for any $p$ with coefficients $0,1$ and using the pigeonhole as before, we can find a non-zero polynomial $P$ with coefficients $0,\pm 1$ such that

$$

\left|\int_0^1 \widetilde P(t) t^\ell\,dt\right|\le n2^{-n/m},\qquad \ell=0,1,\dots,m-1\,.

$$

Assuming that $\widetilde P$ has only $m-1$ (or fewer) sign changes on $[0,1]$ (i.e., that $P$ has fewer than $m$ zeroes on $I$), we again construct $Q(t)=\prod_j(t-t_j)$ as before and note that $\widetilde PQ$ preserves sign on $[0,1]$. Also, $\|Q\|_{L^0([0,1])}\ge\exp[-Cm]$ (each factor $|t-t_j|$ has geometric mean uniformly bounded from below). So, we get the chain of inequalities

$$

\exp[-C\sqrt n-Cm]\le \|\widetilde P Q\|_{L^0([0,1])}\le\|\widetilde P Q\|_{L^1([0,1])}

\\

=\left|\int_0^1 \widetilde P Q\right|\le 2^m n2^{-n/m}\,,

$$

and when $m=c\sqrt n$ with small enough $c>0$, we get a contradiction.
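The bound $\|Q\|_{L^0([0,1])}\ge\exp[-Cm]$ used in this chain can be made explicit: for $s\in[0,1]$ one has $\int_0^1\log|t-s|\,dt=s\log s+(1-s)\log(1-s)-1\ge -1-\log 2$, so each factor has geometric mean at least $\frac 1{2e}$, and the geometric mean is multiplicative. A quick numerical confirmation in Python (the step count and the sample point $s=0.3$ are arbitrary):

```python
import math

def xlogx(x):
    """x log x, extended by 0 at x = 0."""
    return x * math.log(x) if x > 0 else 0.0

def mean_log_exact(s):
    """Integral over t in [0, 1] of log|t - s|, for s in [0, 1]."""
    return xlogx(s) + xlogx(1.0 - s) - 1.0

def mean_log_numeric(s, steps=20_000):
    """Midpoint-rule approximation of the same integral."""
    h = 1.0 / steps
    return h * sum(math.log(abs((i + 0.5) * h - s)) for i in range(steps))

# Worst case over a grid of possible root positions s; attained at s = 1/2.
worst = min(mean_log_exact(j / 100) for j in range(101))
print(mean_log_exact(0.3), mean_log_numeric(0.3), worst, -1 - math.log(2))
```

So $C=1+\log 2$ works for $Q$ as long as all $t_j$ lie in $[0,1]$.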

Next Edit:

The construction of a polynomial with coefficients $0,\pm 1$ having $m$ roots on $(-1,0)$ of degree $Cm^2$ allows one also to construct a polynomial with coefficients $0,1$ of degree $n$ with $c\log^2 n$ roots on $(-1,0)$ giving a slight improvement from the previous "trivial bound" $c\log^2 n/\log\log n$.

To carry the construction out, note first of all that when looking for the sign changes of $P$ in the construction for the $0,\pm 1$ case, we can ignore the humps of height $\exp(-C_1 m)$ with large constant $C_1$ because they can be responsible only for a tiny portion of the $L^1$-norm of $\widetilde PQ$ ($e^{-C_1 m}$ is much less than $e^{-Cm-C\sqrt{Cm^2}}$). Thus, a polynomial $P$ has not only $m$ roots, but also an alternance of size $m$ and of height $e^{-C_1 m}$.

Now take $P_1(x)=P(x^N)$. Then this alternance occurs on the interval $J=[-1,-1+\frac 1N]$ (actually even a bit shorter one, but it is irrelevant). Consider the polynomial $T(x)=\prod_{k:3^k\le \sqrt N}(1+x^{3^k})-1$. Note that $T$ has coefficients $0,1$ and $|T(x)+1|\le N^{-c\log N}=e^{-c\log^2 N}$ on $J$. Thus, if this number is below $e^{-C_1 m}/(Cm^2)$, we can replace every negative power $-x^k$ in $P_1$ by $x^kT(x)$ without disturbing the alternance too much.

That happens exactly when $m<c_1\log^2 N$. The rest should be obvious.
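Both properties of $T$ are easy to check by machine for a small $N$. The Python sketch below (with the illustrative choice $N=3^8$, mine) lists the exponents of $T+1=\prod(1+x^{3^k})$, which are distinct because they are the numbers with ternary digits $0,1$, and compares $|T+1|$ on $J$ with the crude bound $\prod_k 3^k/N$ coming from $0\le 1-(1-t)^p\le pt$.

```python
def exponents_of_T_plus_1(N):
    """Exponents in the expansion of prod_{3^k <= sqrt(N)} (1 + x^{3^k})."""
    sums, p = [0], 1
    while p * p <= N:
        sums = sums + [s + p for s in sums]
        p *= 3
    return sums

def T_plus_1(x, N):
    """Value of the product prod_{3^k <= sqrt(N)} (1 + x^{3^k})."""
    value, p = 1.0, 1
    while p * p <= N:
        value *= 1.0 + x**p
        p *= 3
    return value

def crude_bound(N):
    """Product of 3^k / N over the same k; bounds T + 1 on J = [-1, -1 + 1/N]."""
    bound, p = 1.0, 1
    while p * p <= N:
        bound *= p / N
        p *= 3
    return bound

N = 3**8
exps = exponents_of_T_plus_1(N)
samples = [T_plus_1(-1.0 + t / N, N) for t in (0.0, 0.25, 0.5, 1.0)]
print(len(exps), len(set(exps)), max(samples), crude_bound(N))
```

Since all exponents are distinct, $T+1$ has coefficients $0,1$ with constant term $1$, and hence $T$ itself has coefficients $0,1$.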

Note that this construction is based exactly on David's idea to take whatever we have and to replace negative coefficients by something else afterwards. This particular realization of the idea raises the degree way too much, however. Any suggestions how to do it better? Remember that we cannot raise the multiplicity of zero at $-1$ substantially (see our discussion with Peter Mueller), so some other approach is needed.