I know this question has been asked before, but when I first looked into tensors I never found a good, straightforward explanation of what a tensor is and why it is important. I tried to wrap my head around the topic and express it in the simplest way I could, using a minimum amount of math that should be accessible to a first-year college student. This is what I came up with:

Start from vectors: the idea that a tuple [3, 4] represents a point in a coordinate system on a 2D plane should be pretty easy to grasp. This tuple is a vector and can be thought of as an arrow starting at the origin and ending at that point.

Typically, one is used to orthonormal systems, so the length of the vector follows from Pythagoras' theorem: length = $\sqrt{3^2 + 4^2} = 5$.

Now realize that [3, 4] just means "three units on the x axis and 4 units on the y axis", so the vector can be written as 3[1, 0] + 4[0, 1]. This introduces the concept of basis vectors, and the fact that every vector on the 2D plane is a linear combination of the basis vectors.
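To make the last two steps concrete, here is a minimal sketch in plain Python. The helper name `combine` is just something I made up for illustration; it rebuilds [3, 4] from the standard basis vectors and computes the length with Pythagoras' theorem.

```python
import math

# Standard (orthonormal) basis vectors of the 2D plane.
e1 = (1.0, 0.0)
e2 = (0.0, 1.0)

def combine(a, u, b, w):
    """Return the linear combination a*u + b*w of two 2D vectors."""
    return (a * u[0] + b * w[0], a * u[1] + b * w[1])

v = combine(3, e1, 4, e2)   # 3*[1, 0] + 4*[0, 1]
length = math.hypot(*v)     # Pythagoras: sqrt(3^2 + 4^2)

print(v, length)
```

Nothing here is specific to the numbers 3 and 4; any pair of coefficients gives some vector in the plane.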

Now here comes the question: must the basis vectors be orthogonal and of unit length? The answer is no: you can choose basis vectors that are not unit length and that are at an arbitrary angle to each other, as long as they are linearly independent.
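As a sketch of what "choosing another basis" means in practice, the snippet below finds the coefficients of the same vector [3, 4] in an oblique, non-unit basis. The basis `b1 = (2, 0)`, `b2 = (1, 2)` and the function name `components_in_basis` are my own choices for illustration, and the 2x2 system is solved with Cramer's rule.

```python
def components_in_basis(v, b1, b2):
    """Solve v = a*b1 + b*b2 for (a, b) using Cramer's rule."""
    det = b1[0] * b2[1] - b2[0] * b1[1]
    if det == 0:
        # Linearly dependent vectors do not form a basis.
        raise ValueError("basis vectors are linearly dependent")
    a = (v[0] * b2[1] - b2[0] * v[1]) / det
    b = (b1[0] * v[1] - v[0] * b1[1]) / det
    return a, b

b1 = (2.0, 0.0)   # not unit length
b2 = (1.0, 2.0)   # not perpendicular to b1
coeffs = components_in_basis((3.0, 4.0), b1, b2)

print(coeffs)  # (0.5, 2.0), i.e. [3, 4] = 0.5*b1 + 2*b2
```

The arrow itself has not changed; only the numbers used to describe it have.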

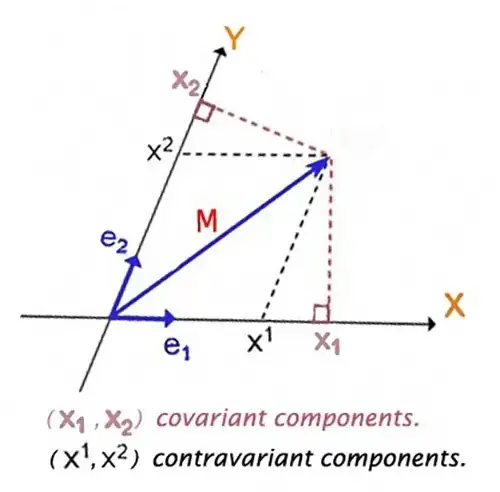

The next question is: if the basis vectors are oblique (not at right angles), how do you represent a vector's coordinates? Do you take the ones obtained by drawing lines from the tip of the vector parallel to the axes, or the ones obtained by dropping perpendiculars from the tip of the vector onto the axes?

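- The answer is: both work. The parallel construction gives the contravariant components and the perpendicular construction gives the covariant components. This naturally introduces the concepts of covariance, contravariance and dual space.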

- This also introduces a new problem: if the basis vectors are oblique, you cannot use the Pythagorean theorem on the components to calculate the length of the vector, so how do you calculate it? The answer is that you don't sum the squares of the components; you sum the products of the covariant and contravariant components. That is, from the image above, the length of M is $\sqrt{x^1 x_1 + x^2 x_2}$.

- Finally, we get to tensors. It is clear that an orthonormal basis is just a very special case; the general case is an oblique basis with non-unit vectors. "Regular vector notation" is simply not enough to represent and operate on all the quantities you need to manage when doing calculations on vector spaces. Given a vector, you need to be able to specify both the covariant and the contravariant components to calculate quantities such as velocity. Tensors let you do exactly that: they are objects that can carry information about both covariant AND contravariant components, and they let you express quantities like the vector's length concisely. In an orthonormal basis the covariant and contravariant components coincide, so a plain vector is enough to manage them.
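The length formula in the bullets above can be checked numerically. This is only a sketch under my own assumptions: I take the contravariant components as the coefficients of the vector in the oblique basis, and the covariant components as the dot products of the vector with each basis vector (the usual convention, which matches the perpendicular projection only up to the length of each basis vector). The basis and the function names are made up for illustration.

```python
import math

def dot(u, w):
    """Ordinary dot product, computed in the underlying orthonormal coordinates."""
    return u[0] * w[0] + u[1] * w[1]

def contravariant(v, b1, b2):
    """Coefficients (x^1, x^2) with v = x^1*b1 + x^2*b2, via Cramer's rule."""
    det = b1[0] * b2[1] - b2[0] * b1[1]
    return ((v[0] * b2[1] - b2[0] * v[1]) / det,
            (b1[0] * v[1] - v[0] * b1[1]) / det)

def covariant(v, b1, b2):
    """Components (x_1, x_2) defined as x_i = v . b_i (assumed convention)."""
    return (dot(v, b1), dot(v, b2))

b1, b2 = (2.0, 0.0), (1.0, 2.0)   # oblique, non-unit basis (illustrative)
v = (3.0, 4.0)                    # true length is 5

up = contravariant(v, b1, b2)     # (0.5, 2.0)
down = covariant(v, b1, b2)       # (6.0, 11.0)

# Sum of products of covariant and contravariant components.
length = math.sqrt(up[0] * down[0] + up[1] * down[1])
# Naive Pythagoras on the oblique components gives the wrong answer.
naive = math.sqrt(up[0] ** 2 + up[1] ** 2)

print(length, naive)
```

With these numbers the mixed sum is 0.5·6 + 2·11 = 25, so the length comes out as 5, the same value Pythagoras gives in the orthonormal basis, while the naive sum of squares of the oblique components does not.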

Now, is the above reasoning correct? As much as I have searched in the past for simple explanations like this, I have never found one. Tensors are simply objects that let you represent vectors in any possible basis. They are fundamental in various situations, for example in general relativity, where spacetime is not a Euclidean space (and the basis vectors of different observers are at different angles to each other). The typical description you find, "a tensor is a generalization of a vector: a tensor of rank 0 is a scalar, a tensor of rank 1 is a vector, a tensor of rank 2 is a matrix, etc.", seems incomplete, or at least it only captures one consequence of tensors. It's like saying that a vector with one component is a scalar, or that a matrix with one row is a vector: it doesn't tell you anything about what these objects are. The same goes for the stress tensor: many use it as an example of what a tensor is, but that's just an application of what a tensor can do, and it does not capture any of the geometrical characteristics I described above.

Given that I'm not an expert in math/physics, my question is really: is the above explanation of a tensor correct / acceptable?