One must remember that SI is defined for ease of use and human familiarity. Natural unit systems have two problems in this regard: it's hard to keep track of the units, and therefore hard to avoid trivial mistakes like calling a length a volume or a time a frequency, and the constants involved are either impractically huge or impractically tiny at the human scale, making the units impractically sized as well. This is why SI defines the units it does.

Length and time are kept distinct because the conversion factor between them (the speed of light) is ridiculously huge. A light-second is about three hundred thousand kilometers, which is too big. And while a light-nanosecond is about a foot, a nanosecond itself is too small. As a result, we give the speed of light a unit (meters per second) and a numerical value that allows us to switch easily between the predefined values of second and meter, so we can then throw the old definition of the meter away and use the new, lightspeed-based one.
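To put numbers on that, here is a minimal sketch using the exact SI value of the speed of light. The function name and the choice to report feet are my own illustrative assumptions.

```python
# Speed of light in m/s; exact by definition in the SI.
C = 299_792_458

# Metres per international foot (exact by definition).
FOOT = 0.3048


def light_travel_distance(seconds: float) -> float:
    """Distance in metres that light covers in the given time."""
    return C * seconds


light_second_km = light_travel_distance(1) / 1000
light_nanosecond_ft = light_travel_distance(1e-9) / FOOT

print(f"light-second:     {light_second_km:,.0f} km")
print(f"light-nanosecond: {light_nanosecond_ft:.3f} ft")
```

The first number is uncomfortably large and the second is a shade under one foot, which is the mismatch described above.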

And if you think length and time have it bad, remember that mass and energy have the same conversion factor, but squared. A square-light-kilogram is about ninety thousand million million joules, roughly twenty megatons of TNT and hopelessly far from anything at the human scale. And trying to go the other way leaves us with a mass unit of about $10^{-17}$ kilograms, which is even less practical than a nanosecond. So instead of using the speed of light to convert between mass and energy, we use the ratio between a meter and a second.
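The same arithmetic for mass and energy, as a rough sketch. The helper names `rest_energy` and `mass_equivalent` are hypothetical, chosen just for this illustration.

```python
# Speed of light in m/s; exact by definition in the SI.
C = 299_792_458


def rest_energy(mass_kg: float) -> float:
    """Energy in joules equivalent to a mass, E = m * c**2."""
    return mass_kg * C**2


def mass_equivalent(energy_j: float) -> float:
    """Mass in kilograms equivalent to an energy, m = E / c**2."""
    return energy_j / C**2


print(f"1 kg -> {rest_energy(1):.3e} J")
print(f"1 J  -> {mass_equivalent(1):.3e} kg")
```

Neither direction gives a unit anyone would want to weigh groceries or pay an electricity bill in.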

Of course, energy and time are related by Planck's constant, or the "action quantum" (I just made that term up), but it is ridiculously tiny - even more than lightspeed is ridiculously huge. So we do the same thing: we give it a unit (joule second) and a numerical value that allows us to switch easily between the predefined values of joule and second, so we can throw those definitions away and use the new, Planck-based one.

Except that it's actually much easier for us to directly measure mass than energy, so we measure a kilogram, multiply it by a meter-per-second squared (not to be confused with meter per second-squared) and call that a joule, then throw away the predefined value of a kilogram to use the new, Planck-based one (which we finally did in 2019).
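One way to see how a fixed Planck constant pins down the kilogram is to chain $E = mc^2$ with $E = hf$, which assigns a frequency to any mass. This is only the arithmetic behind the idea, not a description of how a kilogram is realized in a laboratory; the function name is my own.

```python
C = 299_792_458     # speed of light, m/s (exact)
H = 6.62607015e-34  # Planck constant, J*s (exact since 2019)


def mass_to_frequency(mass_kg: float) -> float:
    """Frequency in hertz whose quantum energy h*f equals m*c**2."""
    return mass_kg * C**2 / H


print(f"1 kg <-> {mass_to_frequency(1):.4e} Hz")
```

The result is on the order of $10^{50}$ hertz, which shows just how tiny the constant is compared to everyday quantities.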

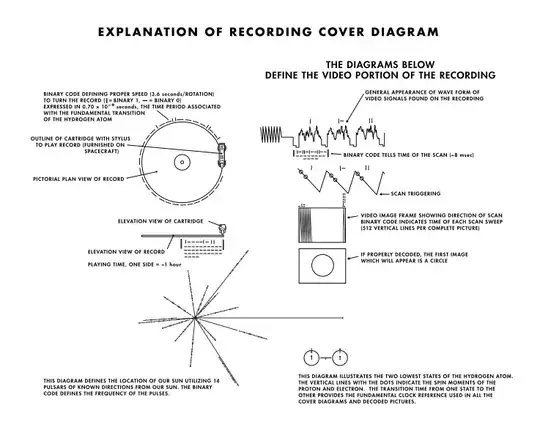

Then there's amperes. An electric unit was defined separately, rather than derived from $LTM$ (length, time, mass), because there were multiple ways to derive it, unlike, say, energy, which is always $[M][L]^2[T]^{-2}$. Sure, the Gaussian (electrostatic) definition of charge was $[M]^{1/2}[L]^{3/2}[T]^{-1}$, but the electromagnetic definition of charge was $[M]^{1/2}[L]^{1/2}$.
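Both sets of exponents drop out of a force law once its proportionality constant is set to one. A sketch, assuming the unrationalized forms of the Coulomb force between two charges and the force per length between two parallel currents (the symbols $q$, $I$, $r$, $d$ and $\ell$ are my own labels):

$$
F = \frac{q_1 q_2}{r^2} \;\Rightarrow\; [q]_{\text{ESU}}^2 = [F][L]^2 = [M][L]^3[T]^{-2} \;\Rightarrow\; [q]_{\text{ESU}} = [M]^{1/2}[L]^{3/2}[T]^{-1}
$$

$$
\frac{F}{\ell} = \frac{2 I_1 I_2}{d} \;\Rightarrow\; [I]_{\text{EMU}}^2 = [F] = [M][L][T]^{-2} \;\Rightarrow\; [q]_{\text{EMU}} = [I]_{\text{EMU}}[T] = [M]^{1/2}[L]^{1/2}
$$

$$
\frac{[q]_{\text{ESU}}}{[q]_{\text{EMU}}} = [L][T]^{-1}
$$

The ratio of the two has the dimensions of a velocity.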

The discrepancy is caused by which relation you consider fundamental. ESU (electrostatic units) starts from Coulomb's law; EMU (electromagnetic units) starts from Ampere's law. These two methods create units of charge that differ by a factor of lightspeed, hence the different units. SI went with Ampere's law since it was easier to measure, hence the choice of ampere over coulomb, but has since decided to count electrons per second, deciding that the fundamental unit is the quantum of charge. This, by the way, is why you don't hear anything about "units of color charge" or "units of flavor charge"; the counts of charge quanta are treated as unitless counts, sidestepping the whole issue.
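Counting charges per second is simple arithmetic once the elementary charge has a fixed value. A minimal sketch, with a function name of my own choosing:

```python
# Elementary charge in coulombs; exact by definition since 2019.
E_CHARGE = 1.602176634e-19


def charges_per_second(current_a: float) -> float:
    """Number of elementary charges passing per second at a given current."""
    return current_a / E_CHARGE


print(f"1 A = {charges_per_second(1):.4e} elementary charges per second")
```

So an ampere is just a count of a little over six billion billion charge quanta per second.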

Similarly, you may have heard that "temperature is just energy". It's not, it's energy per unit entropy. It's just that entropy is fundamentally a description of information, so it can be reduced to a unitless count of "bits" or "degrees of freedom". Likewise, amount of substance is just counting particles; the mole is simply a convenient number to switch between the realm of particles and the realm of substances. As for luminous intensity, the very concept depends on the sensitivity of the human eye; candelas would not be missed outside of photometry, and indeed aren't.
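To make the "energy per unit entropy" and "just counting" readings concrete, here is a rough sketch using the fixed values of the Boltzmann and Avogadro constants. Measuring entropy in bits (hence the factor of $\ln 2$) and the function names are my own assumptions for illustration.

```python
import math

K_B = 1.380649e-23   # Boltzmann constant, J/K (exact since 2019)
N_A = 6.02214076e23  # Avogadro constant, 1/mol (exact since 2019)


def joules_per_bit(temperature_k: float) -> float:
    """Temperature expressed as energy per bit of entropy: k_B * T * ln 2."""
    return K_B * temperature_k * math.log(2)


def particle_count(moles: float) -> float:
    """Number of particles in the given amount of substance."""
    return moles * N_A


print(f"300 K -> {joules_per_bit(300):.3e} J per bit")
print(f"1 mol -> {particle_count(1):.4e} particles")
```

In both cases the kelvin and the mole turn out to be conversion factors attached to a count.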