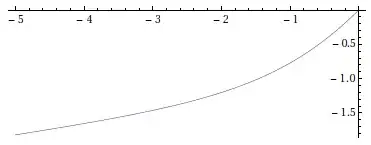

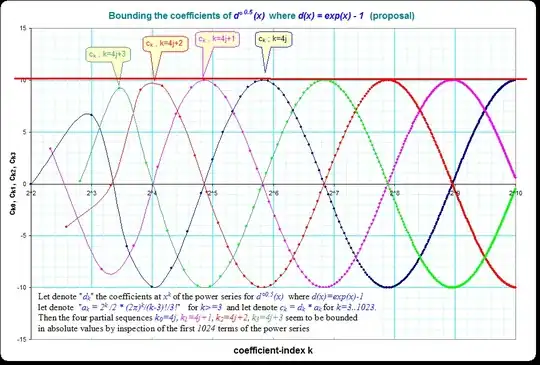

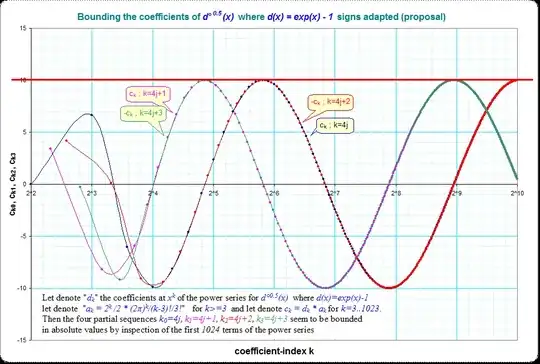

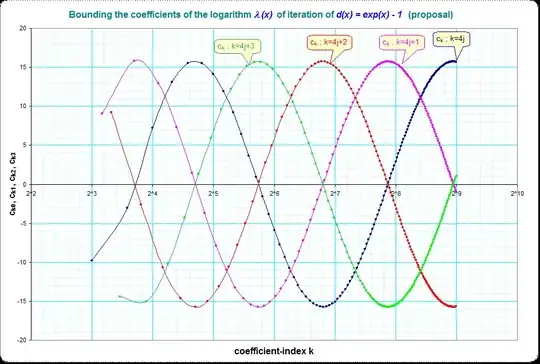

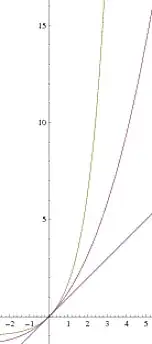

The question is about the function $f(x)$ such that $f(f(x))=\exp(x)-1$.
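As formal power series, the equation determines $f$ term by term. This sketch assumes $f(0)=0$ and $f'(0)=1$ and writes $f(x)=x+\sum_{m\ge2}a_m x^m$. The coefficient of $x^m$ in $f(f(x))$ is $2a_m$ plus terms that involve only $a_2,\dots,a_{m-1}$. Matching it with $1/m!$ therefore forces each $a_m$ in turn. The Python sketch below does this with exact rationals. The helper names `mul`, `compose` and `half_iterate_expm1` are hypothetical.

```python
from fractions import Fraction
from math import factorial


def mul(p, q, n):
    """Product of two power series (coefficient lists), truncated to degree < n."""
    r = [Fraction(0)] * n
    for i, a in enumerate(p[:n]):
        if a == 0:
            continue
        for j, b in enumerate(q[: n - i]):
            r[i + j] += a * b
    return r


def compose(f, g, n):
    """Coefficients of f(g(x)) up to degree < n; g must have zero constant term."""
    result = [Fraction(0)] * n
    power = [Fraction(1)] + [Fraction(0)] * (n - 1)  # g(x)**0
    for k in range(n):
        if k > 0:
            power = mul(power, g, n)  # now g(x)**k
        if f[k] != 0:
            for i in range(n):
                result[i] += f[k] * power[i]
    return result


def half_iterate_expm1(n):
    """First n coefficients of the formal f with f(f(x)) = exp(x) - 1, f(0)=0, f'(0)=1."""
    if n < 2:
        raise ValueError("need n >= 2")
    target = [Fraction(0)] + [Fraction(1, factorial(k)) for k in range(1, n)]
    f = [Fraction(0), Fraction(1)] + [Fraction(0)] * (n - 2)
    for m in range(2, n):
        # With a_m still 0, this is the x^m coefficient of f(f(x)) without its 2*a_m part.
        rest = compose(f, f, m + 1)[m]
        f[m] = (target[m] - rest) / 2
    return f


if __name__ == "__main__":
    for m, a in enumerate(half_iterate_expm1(8)):
        print(m, a)
```

The output begins $f(x)=x+\tfrac{x^2}{4}+\tfrac{x^3}{48}+\tfrac{x^5}{3840}-\tfrac{7x^6}{92160}+\cdots$. The related question on the non-convergence of this series, listed at the end, indicates that it does not by itself define an analytic $f$ near $0$.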

The question is open-ended. It was discussed quite recently in a comment thread on Scott Aaronson's blog: http://scottaaronson.com/blog/?p=263

As $x$ goes to infinity, $f$ grows faster than linear functions (linear meaning $O(x)$), faster than polynomials (meaning $\exp(O(\log x))$), faster than quasi-polynomials (meaning $\exp(\exp(O(\log\log x)))$), faster than quasi-quasi-polynomials, and so on. On the other hand, $f$ is subexponential (even in the CS sense $f(x)=\exp(o(x))$), sub-subexponential ($f(x)=\exp(\exp(o(\log x)))$), sub-sub-subexponential, and so on.
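A short comparison argument checks the simplest cases of these bounds. This sketch assumes that $f$ is real-valued and that $f(x)\to\infty$ as $x\to\infty$; the question does not state either assumption. Suppose $g$ and $h$ are increasing and $h(x)\le f(x)\le g(x)$ for all large $x$. Because $f(x)$ is itself large, the same bounds apply at the point $f(x)$. Combined with $f(f(x))=e^x-1$, this gives

$$
h(h(x)) \;\le\; h(f(x)) \;\le\; e^{x}-1 \;\le\; g(f(x)) \;\le\; g(g(x)) \qquad (x \text{ large}).
$$

Some representative choices:

$$
\begin{aligned}
g(x) &= Cx^{k}: & g(g(x)) &= C^{k+1}x^{k^{2}},\\
g(x) &= e^{(\log x)^{k}}: & g(g(x)) &= e^{(\log x)^{k^{2}}},\\
h(x) &= e^{\varepsilon x}: & h(h(x)) &= \exp\!\big(\varepsilon e^{\varepsilon x}\big),\\
h(x) &= e^{x^{\varepsilon}}: & h(h(x)) &= \exp\!\big(e^{\varepsilon x^{\varepsilon}}\big).
\end{aligned}
$$

The first two right-hand sides are $e^{o(x)}$, so they eventually fall below $e^x-1$. The last two eventually exceed it. So $f(x)=O(x^k)$ is impossible, $f$ is not eventually bounded above by $e^{(\log x)^k}$, and $f$ is not eventually bounded below by $e^{\varepsilon x}$ or by $e^{x^{\varepsilon}}=\exp(\exp(\varepsilon\log x))$. The full $o(\cdot)$ statements need a little more care.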

What can be said about $f(x)$, and about other functions with this kind of intermediate growth? Can such intermediate growth be represented by analytic functions? Is this function $f(x)$, or any other function with such intermediate growth, relevant to interesting mathematics? (It appears that quite a few interesting mathematicians and other scientists have thought about this function and its growth rate.)
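Here is one way to get a concrete numerical picture on $x>0$. Any solution commutes with $g(x)=e^x-1$, because $f(g(x))=f(f(f(x)))=g(f(x))$. Since $g$ is a bijection of $(0,\infty)$ with inverse $g^{-1}(x)=\log(1+x)$, it follows that $f(x)=g^{n}\big(f(g^{-n}(x))\big)$ for every $n$. Because $g^{-n}(x)\to0$, one can replace $f$ near $0$ by a truncated formal series and then push the result back out. The Python sketch below does this. It uses the start of the formal power series solution at $0$, assuming $f(0)=0$ and $f'(0)=1$: $x+x^2/4+x^3/48+x^5/3840-7x^6/92160$. The name `half_expm1`, the number of steps and the truncation order are arbitrary choices. This is a heuristic in the spirit of the Écalle-type constructions in the related questions, not a proof that the resulting function is analytic.

```python
import math

# Assumed from the formal power series solution at 0 (with f(0)=0, f'(0)=1):
# f(y) = y + y^2/4 + y^3/48 + 0*y^4 + y^5/3840 - 7*y^6/92160 + O(y^7).
COEFFS = [0.0, 1.0, 1 / 4, 1 / 48, 0.0, 1 / 3840, -7 / 92160]


def series_near_zero(y):
    """Truncated series for f, evaluated by Horner's rule; only trustworthy for small y."""
    acc = 0.0
    for c in reversed(COEFFS):
        acc = acc * y + c
    return acc


def half_expm1(x, steps=100):
    """Approximate f(x) for x > 0 via f = g^n o f o g^(-n), with g = expm1.

    `steps` (n) is an arbitrary choice for this sketch, not a tuned value.
    """
    if x <= 0:
        raise ValueError("this sketch only handles x > 0")
    y = x
    for _ in range(steps):
        y = math.log1p(y)  # apply g^(-1); iterates decrease towards the fixed point 0
    y = series_near_zero(y)  # replace f by its truncated series near 0
    for _ in range(steps):
        y = math.expm1(y)  # apply g to move back out
    return y


if __name__ == "__main__":
    x = 1.0
    fx = half_expm1(x)
    print(fx, half_expm1(fx), math.expm1(x))
```

The truncated series composed with itself agrees with $e^y-1$ up to order $y^7$, and $g^{-n}(x)$ is small. So `half_expm1(half_expm1(x))` should closely match `math.expm1(x)` for moderate $x>0$. Increasing `steps` shrinks the truncation error but amplifies floating-point rounding.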

Related MO questions:

- solving $f(f(x))=g(x)$

- How to solve $f(f(x)) = \cos(x)$?

- Does the exponential function has a square root

- Closed form functions with half-exponential growth

- $f\circ f=g$ revisited

- The non-convergence of f(f(x))=exp(x)-1 and labeled rooted trees

- The functional equation $f(f(x))=x+f(x)^2$

- Rational functions with a common iterate

- Smoothness in Ecalle's method for fractional iterates.