Given the function $xe^x$, is there a way to find its functional square root, meaning a function $f$ that satisfies the equation $f(f(x))=xe^x$? I know that there may not be a unique solution, but in that case how do you find the family of solutions? (Note: I'm looking for infinitely differentiable functions.)

-

Let's try to do this for x ≥ 0. My general philosophy, when a function has a fixed point (as the function h(x) = x exp(x) has at x = 0), is to begin by finding an approximation to the function near the fixed point (in this case the approximation g(x) = x + (1/2)x^2 seems to work). Now for x > 0 apply the inverse function hinv of h, say N times, until its Nth iterate (hinv^N)(x) on xis very close to 0, then apply g to the result, then apply h to that result an equal number (N) of times. Finally, take the limit of this expression (h^N)(g((hinv^N)(x))) as N → ∞. – Daniel Asimov Aug 30 '23 at 00:48

-

You should read Alexander, A History of Complex Dynamics. The special thing about $x e^x$ is that the derivative at the fixpoint is exactly $1.$ So the easier methods don't work, and you are stuck with Ecalle's method. The good news is that there is a solution which is real analytic for $x > 0$ which can be extended as a $C^\infty$ solution (but not holomorphic). Anyway, pretty similar to $x^2 + x$ which I do at https://math.stackexchange.com/questions/911818/how-to-obtain-fx-if-it-is-known-that-ffx-x2x/912324#912324 – Will Jagy Aug 30 '23 at 01:17

-

The other book would be Kuczma, Choczewski, and Ger (KCG), Iterative Functional Equations, https://www.cambridge.org/core/books/iterative-functional-equations/37D010F4F9B5FD44143F6F4EC8FD2807, and I got some help from Milnor, Dynamics in One Complex Variable. – Will Jagy Aug 30 '23 at 03:25

-

In my comment above, "an approximation to the function" should have read "an approximation to the iterative square-root function". (And xis —> x is.) When the original function has a linear approximation with derivative D at the fixed point satisfying |D| (|D| - 1) ≠ 0 (unlike h(x) = x exp(x) at x = 0), then Koenigs's theorem guarantees the existence of a square-root function near the fixed point. – Daniel Asimov Aug 30 '23 at 03:49

-

Related: solving $f(f(x))=g(x)$. See also f(f(x))=exp(x)-1 and other functions "just in the middle" between linear and exponential and the links therein. – Timothy Chow Aug 30 '23 at 12:41

1 Answer

I'd like to give a practical computational technique for solving this and more general problems (but omitting proofs) as formal power series.

Suppose we have a formal power series $f(x)=x+O(x^2)$. Use the notation $f^{\circ n}$ to mean $f$ iterated $n$ times, allowing $n$ to also take non-integral values.

Now consider what happens as we vary $n$ around $0$. We have a kind of flow on $\mathbb{R}$. Near $n=0$, $f^{\circ n}$ is approximately the identity function. So we expect $f^{\circ\varepsilon}(x) = x + \varepsilon g(x)+O(\varepsilon^2)$. This is a kind of infinitesimal flow, so we can think of $g(x) \frac \partial {\partial x}$ as a vector field on $\mathbb{R}$. Define the functional logarithm $\mathop{\mathrm{LOG}}(f)$ by $$f^{\circ\varepsilon}(x) = x+\varepsilon \mathop{\mathrm{LOG}}(f)(x) + O(\varepsilon^2)$$ so $g=\mathop{\mathrm{LOG}}(f)$.

The inverse of $\mathop{\mathrm{LOG}}$, call it $\mathop{\mathrm{EXP}}$, is the usual exponential of a vector field that turns an infinitesimal flow into a finite one, i.e. $$\mathop{\mathrm{EXP}}(g)=\exp\left( g(x) \frac \partial {\partial x} \right)x$$

With these definitions we have $\mathop{\mathrm{EXP}}(\mathop{\mathrm{LOG}}(f))(x) = f(x)$ and more generally $\mathop{\mathrm{EXP}}(n\mathop{\mathrm{LOG}}(f))(x)=f^{\circ n}(x)$.

We can compute $\mathop{\mathrm{LOG}}(f)$ by analogy with the usual Taylor series for $\log(1+x)$.

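Just as we have (for suitable $x$) $$\log(x)=\sum_{i=1}^\infty-(-1)^i\frac{u^i}{i}$$ where $u=x-1$, it is possible to show (after some work) that $$\mathop{\mathrm{LOG}}(f)=-\sum_{i=1}^\infty(-1)^i\frac{j^{\circ i}(x)}{i}$$ where $j(g)=g\circ f-g$ and $j^{\circ i}(x)$ means $j$ applied $i$ times to the identity function $x$. Because $f(x)=x+O(x^2)$, each application of $j$ raises the lowest-order term by at least one degree, so only finitely many terms of the sum contribute to any given coefficient.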

$\mathop{\mathrm{EXP}}$ is just the usual exponential of a vector field so it's given by $$\mathop{\mathrm{EXP}}(g)(x) = \sum_{i=0}^\infty \frac{1}{i!} \left(g(x) \frac \partial {\partial x} \right)^i x.$$

This now directly leads to a calculational method in Mathematica, say.

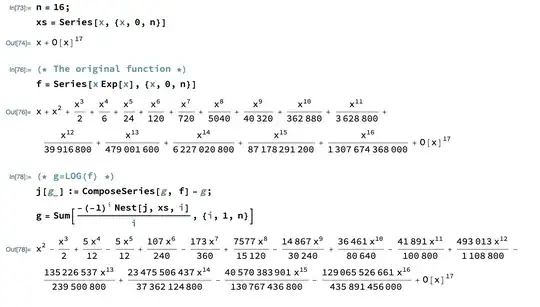

First the computation of the functional logarithm. Note how it's just one line in Mathematica.

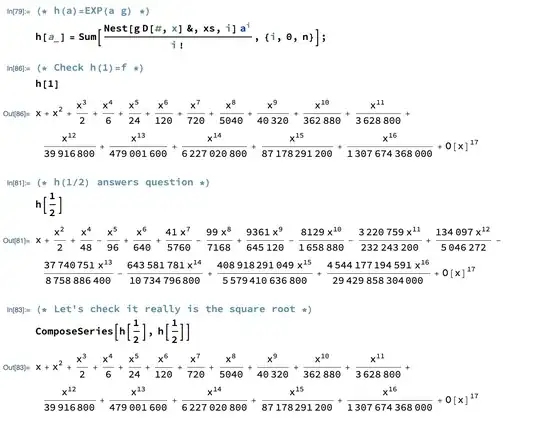
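For readers without Mathematica, here is a rough Python sketch of the same LOG series using exact rational arithmetic on truncated power series. It is not a transcription of the screenshot: the helper names (`mul`, `compose`, `functional_log`) and the truncation order `N` are my own assumptions, chosen only for illustration.

```python
from fractions import Fraction
from math import factorial

N = 6  # truncation order: keep coefficients of x^0 .. x^N (arbitrary choice)

def mul(a, b):
    """Multiply two series given as coefficient lists, dropping x^(N+1) and up."""
    out = [Fraction(0)] * (N + 1)
    for i, ai in enumerate(a):
        for k, bk in enumerate(b):
            if i + k > N:
                break
            out[i + k] += ai * bk
    return out

def compose(g, f):
    """Return g(f(x)) truncated at x^N via Horner's rule; assumes f[0] == 0."""
    out = [Fraction(0)] * (N + 1)
    for c in reversed(g):
        out = mul(out, f)
        out[0] += c
    return out

def functional_log(f):
    """LOG(f) = sum_{i>=1} (-1)^(i+1) j^i(x) / i, where j(g) = g∘f - g."""
    term = [Fraction(0), Fraction(1)] + [Fraction(0)] * (N - 1)  # identity series x
    total = [Fraction(0)] * (N + 1)
    for i in range(1, N + 1):
        # Apply j once more; the lowest-order term moves up by one degree,
        # so after N applications nothing survives the truncation.
        term = [p - q for p, q in zip(compose(term, f), term)]
        sign = 1 if i % 2 == 1 else -1
        total = [t + sign * c / i for t, c in zip(total, term)]
    return total

# x*exp(x) = sum_{k>=1} x^k / (k-1)!
xex = [Fraction(0)] + [Fraction(1, factorial(k - 1)) for k in range(1, N + 1)]
log_xex = functional_log(xex)
print(log_xex[:5])  # coefficients of x^0 .. x^4
```

The leading coefficients come out as $x^2 - \tfrac12 x^3 + \tfrac5{12}x^4 + \cdots$, which can be checked by hand from the first three terms of the sum.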

Now exponentiate back up again, solving the OP's problem. Note again how the exponential is just one line.

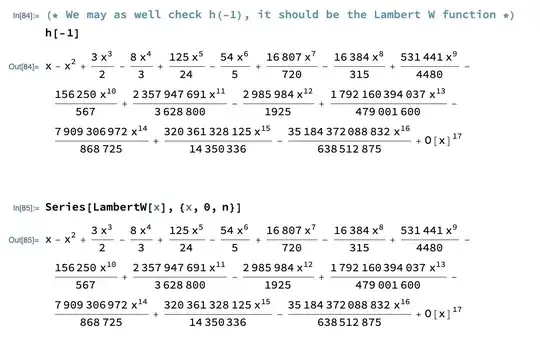
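A similar Python sketch for the exponential step, again with exact rationals. The input coefficients of $\mathop{\mathrm{LOG}}(xe^x)$ up to $x^4$ are hard-coded from a hand computation ($x^2-\tfrac12x^3+\tfrac5{12}x^4$), and the names `deriv` and `functional_exp` are hypothetical helpers, not anything taken from the screenshot.

```python
from fractions import Fraction
from math import factorial

N = 4  # truncation order (arbitrary; matches the hard-coded input below)

def mul(a, b):
    """Multiply two truncated series given as coefficient lists [c0, ..., cN]."""
    out = [Fraction(0)] * (N + 1)
    for i, ai in enumerate(a):
        for k, bk in enumerate(b):
            if i + k > N:
                break
            out[i + k] += ai * bk
    return out

def deriv(a):
    """Termwise derivative, padded back to length N + 1."""
    return [k * a[k] for k in range(1, N + 1)] + [Fraction(0)]

def functional_exp(g):
    """EXP(g)(x) = sum_{i>=0} (1/i!) (g d/dx)^i x, for g = O(x^2)."""
    term = [Fraction(0), Fraction(1)] + [Fraction(0)] * (N - 1)  # identity series x
    total = list(term)
    for i in range(1, N + 1):
        # One more application of the operator g(x) d/dx; since g = O(x^2)
        # this raises the lowest-order term, so the loop can stop at N.
        term = mul(g, deriv(term))
        total = [t + c / factorial(i) for t, c in zip(total, term)]
    return total

# Assumed input: LOG(x e^x) to order x^4, worked out by hand.
log_xex = [Fraction(0), Fraction(0), Fraction(1), Fraction(-1, 2), Fraction(5, 12)]

f_back = functional_exp(log_xex)                    # n = 1: should give x e^x again
half = functional_exp([c / 2 for c in log_xex])     # n = 1/2: the half iterate
print(f_back)
print(half)
```

To this order the half iterate is $x+\tfrac12x^2+\tfrac1{48}x^4$ (the $x^3$ coefficient vanishes), and exponentiating the full logarithm returns $x+x^2+\tfrac12x^3+\tfrac16x^4$ as a consistency check.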

As a bonus, our method gives us the series for the Lambert W function:
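Since $W$ is the inverse of $xe^x$, it is the $n=-1$ iterate, so with $f(x)=xe^x$ it should come out as $\mathop{\mathrm{EXP}}(-\mathop{\mathrm{LOG}}(f))$. The first few terms below are worked by hand rather than copied from the original output:

$$W(x)=\mathop{\mathrm{EXP}}(-\mathop{\mathrm{LOG}}(f))(x)=x-x^2+\frac32x^3-\frac83x^4+O(x^5),$$

which agrees with the known expansion $W(x)=\sum_{n\ge1}\frac{(-n)^{n-1}}{n!}x^n$.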

BTW, instead of computing the functional $\log$ via a series, we could have computed the functional square root directly using series. But I like this method as it solves a wide family of related problems.
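For comparison, here is a minimal Python sketch of that direct route, solving $h(h(x))=xe^x$ one coefficient at a time. It relies on the observation that, when $h(x)=x+O(x^2)$, the coefficient of $x^n$ in $h\circ h$ equals $2h_n$ plus terms involving only lower coefficients. The function names and the truncation order are assumptions made for this illustration.

```python
from fractions import Fraction
from math import factorial

N = 6  # truncation order (arbitrary choice)

def mul(a, b):
    """Multiply two truncated series given as coefficient lists [c0, ..., cN]."""
    out = [Fraction(0)] * (N + 1)
    for i, ai in enumerate(a):
        for k, bk in enumerate(b):
            if i + k > N:
                break
            out[i + k] += ai * bk
    return out

def compose(g, f):
    """Return g(f(x)) truncated at x^N via Horner's rule; assumes f[0] == 0."""
    out = [Fraction(0)] * (N + 1)
    for c in reversed(g):
        out = mul(out, f)
        out[0] += c
    return out

def half_iterate(f):
    """Formal series h = x + O(x^2) with h(h(x)) = f(x); assumes f = x + O(x^2)."""
    h = [Fraction(0), Fraction(1)] + [Fraction(0)] * (N - 1)
    for n in range(2, N + 1):
        # With h[n] still zero, hh[n] holds the contribution of lower-order
        # coefficients only; the unknown h[n] enters the x^n coefficient as 2*h[n].
        hh = compose(h, h)
        h[n] = (f[n] - hh[n]) / 2
    return h

# x*exp(x) = sum_{k>=1} x^k / (k-1)!
xex = [Fraction(0)] + [Fraction(1, factorial(k - 1)) for k in range(1, N + 1)]
h = half_iterate(xex)
print(h)
```

This only handles the square root, whereas the LOG/EXP route gives every fractional iterate from one computation.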

PS I've given no thought to the convergence of any of these series.


-

The most important trick here is that $j$ is linear in its argument, despite involving composition, because it composes on the right. I learnt this from MO but can't find the answer I learnt it from. – Dan Piponi Aug 31 '23 at 00:10

-

For what it's worth, I don't believe your series for the half iterate can have a nonzero radius of convergence. See my question and self-answer: https://mathoverflow.net/questions/45608/does-the-formal-power-series-solution-to-ffx-sin-x-converge

https://mathoverflow.net/questions/45608/does-the-formal-power-series-solution-to-ffx-sin-x-converge/46765#46765

– Will Jagy Aug 31 '23 at 19:14

:(

But that question does have the right-multiplication trick I've been searching for: https://mathoverflow.net/a/45643/1233

– Dan Piponi Aug 31 '23 at 20:54

Similar to another post here involving the functional square root of $e^x$: even if the series has zero radius of convergence, it might have a suitable Borel or Nachbin resummation. It's probably still worth exploring that. – Sidharth Ghoshal Sep 25 '23 at 15:15